Infinite-Length Streaming Architecture in InfiniteTalk

How Infinite Talk AI scales sparse-frame video dubbing to practically infinite sequences.

1. Why long-form dubbing is hard

Most audio-driven video models work well on short clips, but start to break down on long sequences:

- Identity drift — Faces slowly change shape, skin tone shifts, and backgrounds morph over time.

- Style and color drift — Each segment drifts in color and contrast, making the video look like it was shot on different cameras.

- Visible seams between segments — Models that generate in fixed-length chunks often produce hard cuts in motion: head pose snaps, gestures reset, and continuity is lost.

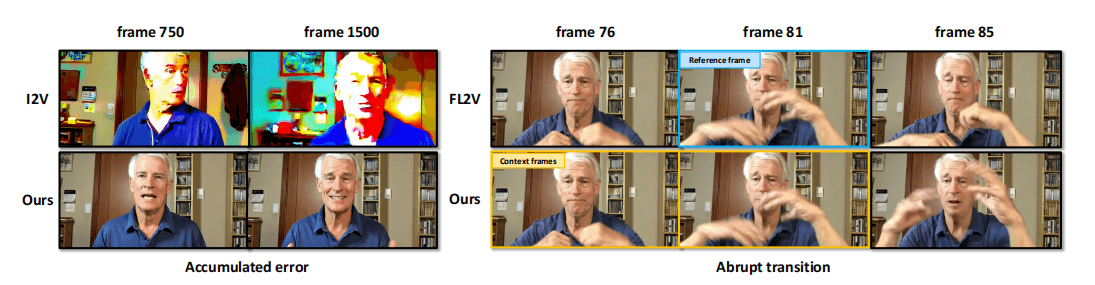

Naive solutions behave like this:

Plain audio-driven I2V

- Starts from a single reference frame and repeatedly rolls forward.

- Motion is free, but identity and scene drift accumulate.

First–Last-frame-constrained I2V (FL2V)

- Forces each chunk to match a fixed first and last frame.

- Prevents drift, but motion becomes stiff: the model copies poses instead of acting out the audio.

The core challenge:

How do we support long or even "infinite" sequences without sacrificing natural, audio-driven motion?

2. The streaming design of InfiniteTalk

InfiniteTalk is built as a streaming audio-driven video generator specifically for long-form dubbing.

Its architecture is based on two ideas:

- Chunked generation

- The video is divided into fixed-length chunks (e.g., 81 frames per block).

- The model generates each chunk in sequence.

- Context frames + reference frames

- Context frames: a short history of previously generated frames that carries motion momentum forward.

- Reference frames: sparsely sampled keyframes from the original video that anchor identity, background, and camera trajectory.

This combination lets InfiniteTalk:

- Use context frames to keep motion continuous across chunks.

- Use reference frames to prevent identity and style drift over long durations.

3. Context frames: keeping motion continuous

3.1 What are context frames?

In InfiniteTalk, each chunk does not start from scratch.

Instead, when generating chunk t, the model also sees a short slice of frames from the end of chunk t–1. These frames are called context frames.

- They are taken from already generated video.

- They are re-encoded by the video VAE into latent space.

- They are fed into the diffusion Transformer together with the new audio and reference frames.

Intuition:

If the previous chunk ended with the character raising their head, the next chunk should start from that raised-head pose and continue naturally — not suddenly snap back to a neutral position.

3.2 Example configuration

In the current setup (you can simplify details in the UI, keep them here for technical readers):

- A video is encoded into a latent sequence by a video VAE.

- Each chunk covers 81 frames total.

- The model keeps a latent context length

tc(for example, 3 latent frames derived from 9 context images). - For each step, InfiniteTalk generates 72 new frames, which are appended after the context frames.

Visually, you can imagine:

[Frames 1–81][Context (Frames 73–81)] + [New Frames 82–153][Context (Frames 145–153)] + [New Frames 154–225]Context frames make motion across chunks feel like one continuous take, rather than a set of stitched clips.

4. Reference frames: preventing drift in identity and camera

Context alone only solves continuity; it doesn't guarantee that:

- The actor still looks like themselves.

- The background and camera style match the original footage.

To address this, InfiniteTalk also uses reference frames sampled from the source video:

- These are sparse keyframes selected from the original clip.

- They are encoded into latent features and fed to the diffusion Transformer.

- They act as soft anchors for:

- Face identity

- Clothing and lighting

- Background layout

- Global camera trajectory

During generation, each chunk is conditioned on:

- The dubbed audio for that time range

- The context frames from previous output

- One or more reference frames sampled according to a keyframe strategy

This is what allows InfiniteTalk to sustain:

- Consistent characters across minutes of video

- Stable backgrounds and camera motion

- A coherent visual style, even as the audio and body motion evolve

Figure 5: Visualization of reference frame conditioning strategies for video dubbing models. Top four rows: conditioning on input video frames. Bottom row: conditioning on generated video frames. Left: Image-to-video dubbing model with initial frame conditioning (I2V) and initial+terminal frame conditioning (IT2V). Right: Streaming dubbing model with four conditioning strategies. Within each category (left/right), all strategies share identical generated-video conditioning approaches.

(Note: detailed strategies M0–M3 for reference placement are covered in soft-reference-control.)

5. I2V vs FL2V vs InfiniteTalk: architecture comparison

To make the design trade-offs clear, here is a simple comparison:

| Method | Conditions used | Context frames | Long-form issues | Best suited for |

|---|---|---|---|---|

| Plain I2V | Single reference image + previous frame | ❌ | Identity and style drift, motion instability | Short demos, toy examples |

| FL2V | First + last frame per chunk | ❌ | Pose snapping at boundaries, rigid motion | Medium clips with simple motion |

| InfiniteTalk | Sparse reference frames + context + audio | ✅ | Smooth motion, stable identity and camera over time | Long-form dubbing, episodes |

6. From theory to product: how Infinite Talk AI handles long videos

On the product side (Infinite Talk AI), the streaming architecture is used roughly like this:

Per-pass limit

- For practical compute and UX reasons, a single render pass may be capped (for example at ~600 seconds of output).

Chunking and scheduling

- Long audio + source video are segmented into chunks that align with the model's preferred length.

- Each chunk:

- Receives its local audio segment.

- Uses context frames from the end of the previous chunk.

- Shares a pool of reference frames sampled from the full source video.

Stitching

- Generated chunks are concatenated along the timeline.

- Because of overlapping context and soft reference control, seams at chunk boundaries are visually minimal.

In practice, this allows Infinite Talk AI to support:

- Chapter-based workflows (e.g., segmenting a training course or a talk into natural sections).

- Hour-scale programs composed of multiple passes.

- Streaming-style pipelines where content is dubbed in batches but feels like one continuous performance.

7. Why this streaming architecture matters

To summarize:

Naive audio-driven I2V

- Pros: free motion.

- Cons: accumulates drift in identity, style, and background over long durations.

FL2V with hard frame constraints

- Pros: fixes identity drift.

- Cons: introduces pose snapping and stiff motion at chunk boundaries.

InfiniteTalk's streaming architecture combines the strengths without inheriting the weaknesses:

Context frames

Carry motion momentum forward, keeping gestures and head motion continuous across chunks.

Sparse reference frames

Anchor identity, background, and camera trajectory throughout the sequence.

Chunked, audio-driven generation

Scales to virtually unlimited length, while still letting the model act out the dubbed audio naturally.

For creators, this means you can:

- Dub long videos and episodic content without characters "melting" or "resetting" between segments.

- Maintain a consistent visual identity and camera style across an entire series.

- Deliver dubbing that feels like a single take, not a patchwork of disconnected clips.

If you'd like to understand how reference placement and control strength are tuned in InfiniteTalk, continue with:

Soft Reference Control